ChatGPT Is Not “AI”, It’s a Product.

Somewhere along the way, we collectively made a mistake. We started calling ChatGPT “AI.”

ChatGPT is impressive. It’s useful. It’s a breakthrough in user experience.

But it is not the definition of AI, and treating it as such is actively holding back serious businesses, governments, and European innovation. ChatGPT is a consumer-facing interface on top of a proprietary model, hosted in hyperscale cloud infrastructure, optimized for generalized use cases and Silicon Valley economics.

Real AI, especially for B2B, regulated industries, and strategic autonomy, looks very different. And Europe, quietly but deliberately, seems to understand this better than most.

AI Is Not a Chatbot

Let’s start with a simple reframing.

AI is not:

- A single large language model

- A chat interface

- A subscription SaaS tool

- A black box trained on unknown data

AI is:

- A capability embedded into systems

- A decision-support layer on top of your data

- A workflow accelerator

- A reasoning and retrieval engine aligned with business context

ChatGPT is a general-purpose language model service. AI, in practice, is an architecture.

The Real Value of AI Is the Data

For businesses, the model itself is rarely the differentiator.

What matters is:

- Your internal documents

- Your processes

- Your customer history

- Your operational data

- Your domain-specific knowledge

A generic model trained on the public internet will never understand:

- Your contracts

- Your compliance rules

- Your edge cases

- Your internal language

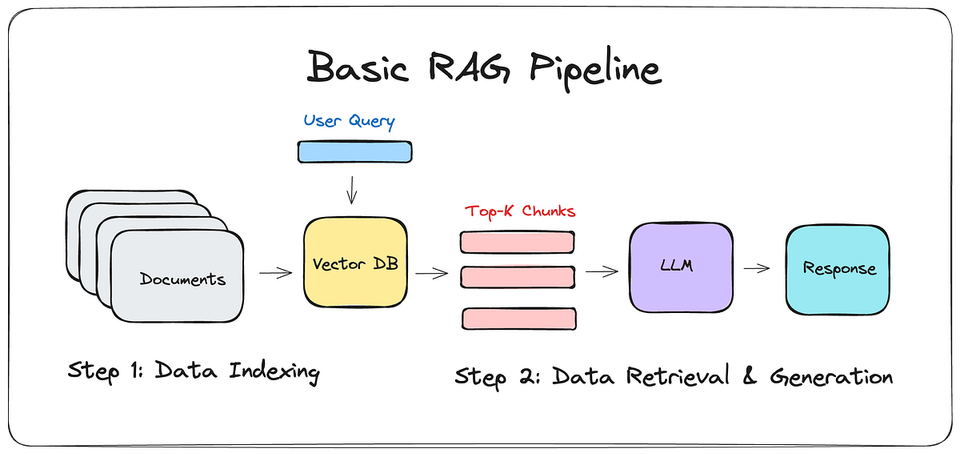

This is why Retrieval-Augmented Generation (RAG) is the most important AI pattern in production today.

RAG: Where AI Becomes Actually Useful

A RAG pipeline allows you to:

- Keep your data private

- Store it in your own vector database

- Retrieve only relevant context

- Let the model reason over your data, not replace it

In other words: The AI does not “know” anything. It retrieves and reasons, just like a good employee.

This is not a ChatGPT feature. This is a system design choice.
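To make the pattern concrete, here is a minimal sketch of the retrieval half of a RAG pipeline. It is an illustration under loud assumptions, not a production design: the documents are invented, the "embedding" is a toy bag-of-words vector standing in for a real embedding model, and the in-memory dictionary stands in for a vector database. The final model call is left out; the point is that only the retrieved context ends up in the prompt.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents; in practice these live in your own store.
DOCUMENTS = {
    "contract-policy": "Supplier contracts above 50000 euro require approval from legal.",
    "leave-policy": "Employees request leave through the HR portal two weeks in advance.",
    "incident-runbook": "For a production incident, page the on-call engineer and open a ticket.",
}


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the ids of the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(DOCUMENTS, key=lambda doc_id: cosine(q, embed(DOCUMENTS[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, k: int = 1) -> str:
    """Assemble a prompt that contains only the retrieved context plus the question."""
    context = "\n".join(f"[{doc_id}] {DOCUMENTS[doc_id]}" for doc_id in retrieve(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("Who must approve supplier contracts?"))
```

The model only ever sees the one document that matched the question. Everything else stays where it is, which is the privacy argument in practice.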

Why Open Source Models Matter More Than Ever

Open-source models (Mistral, LLaMA variants, Qwen, etc.) change the power dynamic completely.

They allow organizations to:

- Run models on-premise or in private cloud

- Control data residency

- Audit behavior

- Customize performance

- Avoid vendor lock-in

Most importantly, they separate AI capability from Big Tech control. This is not an ideological argument; it is a strategic one.
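One way to picture the lock-in point: if a self-hosted model server speaks the widely used OpenAI-style chat-completions format (an assumption here, though several open-source inference servers offer it), then moving between providers is mostly a matter of changing a base URL and a model name. The sketch below only builds the request and does not send it. The URL and model name are hypothetical placeholders.

```python
import json
import urllib.request

# Assumption: the self-hosted server exposes an OpenAI-compatible
# chat-completions route. URL and model name are hypothetical.
LOCAL_BASE_URL = "http://localhost:8000/v1"
LOCAL_MODEL = "my-open-weight-model"


def build_chat_request(base_url: str, model: str, question: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request for the given endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request(LOCAL_BASE_URL, LOCAL_MODEL, "Summarize our leave policy.")
print(request.full_url)

# Sending is left out on purpose. With a server running, it would look like:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```

Because the endpoint is a parameter, the same function works against an on-premise box, a private cloud, or a different vendor.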

Mistral + ASML

The Mistral story is often misunderstood. This is not “Europe copying OpenAI.” This is Europe doing something fundamentally different. There is a reason Mistral and ASML are in a strategic partnership.

Why Mistral Matters

- Open-weight models

- Strong performance-per-parameter

- Optimized for deployment, not just benchmarks

- Designed to be used, not just showcased

Why ASML Matters

ASML is not an AI company — and that’s the point. ASML understands:

- Strategic infrastructure

- Long-term sovereignty

- Industrial dependency risk

By supporting Mistral, Europe is signaling something very clear:

AI is infrastructure — not a consumer app.

Just like:

- Chips

- Energy

- Telecom

- Manufacturing tooling

AI is now strategic capacity.

Hyperscale AI vs European AI: Two Philosophies

Silicon Valley Model

- Centralized

- Cloud-only

- Closed weights

- Data extraction

- Growth-at-all-costs

- Consumer-first

European Model (Emerging)

- Federated

- On-prem / private cloud

- Open or semi-open

- Data sovereignty

- B2B-first

- Compliance-by-design

These are not competing products; they are competing worldviews.

Why On-Prem AI Is Not “Old-School”, It’s the Future

On-prem AI is often dismissed as:

- Too complex

- Too expensive

- Too slow

This is outdated thinking. Modern on-prem AI stacks:

- Use containerization

- Scale horizontally

- Integrate with automation tools (n8n, Make, Airflow)

- Run smaller, more efficient models

- Cost less over time than SaaS subscriptions

For SMEs and enterprises, on-prem AI means:

- Predictable costs

- No data leakage

- Full customization

- Regulatory confidence

AI as an Employee, Not a Product

The most successful AI implementations I see are not “tools.”

They are:

- AI agents handling repetitive analysis

- AI copilots embedded in workflows

- AI systems preparing decisions, not making them

- AI layers that disappear into operations

This is where automation + AI converge. ChatGPT alone can’t do this. RAG + open models + automation pipelines can.
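As a rough illustration of "preparing decisions, not making them", the sketch below separates the step that drafts a recommendation from the step where a person signs it off. Everything in it is hypothetical: the invoice scenario, the names `Proposal`, `prepare_decision`, and `review`, and the simple threshold rule that stands in for a real model call.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    """A decision prepared by an AI step; it has no effect until a person reviews it."""
    invoice_id: str
    recommendation: str
    rationale: str
    status: str = "pending_review"


def prepare_decision(invoice_id: str, amount: float, limit: float) -> Proposal:
    """Stand-in for the AI step: a simple rule here, a model call in a real pipeline."""
    if amount > limit:
        return Proposal(invoice_id, "escalate", f"Amount {amount} exceeds limit {limit}.")
    return Proposal(invoice_id, "approve", f"Amount {amount} is within limit {limit}.")


def review(proposal: Proposal, reviewer: str, accept: bool) -> Proposal:
    """Only a named human reviewer moves a proposal out of 'pending_review'."""
    proposal.status = f"{'accepted' if accept else 'rejected'} by {reviewer}"
    return proposal


proposal = prepare_decision("INV-1", amount=1200.0, limit=1000.0)
print(proposal.recommendation, "|", proposal.status)
```

The automated step can be as sophisticated as you like, but nothing leaves the `pending_review` state without a human name attached to it.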

Why ChatGPT Is Still Useful (But Not the Point)

To be clear: ChatGPT is an excellent interface.

It is:

- Great for ideation

- Great for prototyping

- Great for accessibility

- Great for learning

But confusing ChatGPT with AI is like confusing:

- Excel with finance

- PowerPoint with strategy

- Email with communication

It’s a surface layer, not the system.

The Strategic Question Every European Business Should Ask

Not:

“How do we use ChatGPT?”

But:

“Where do we want intelligence to live in our organization?”

- In someone else’s cloud?

- Or inside our own systems?

So, the future of AI is not:

- One giant model

- One chat window

- One hyperscaler

The future of AI is:

- Modular

- Open

- Private

- Embedded

- Context-aware

- European-by-design

ChatGPT didn’t invent AI. It just made it visible. Now it’s time to build it properly. And Europeans are perfectly capable of doing that.